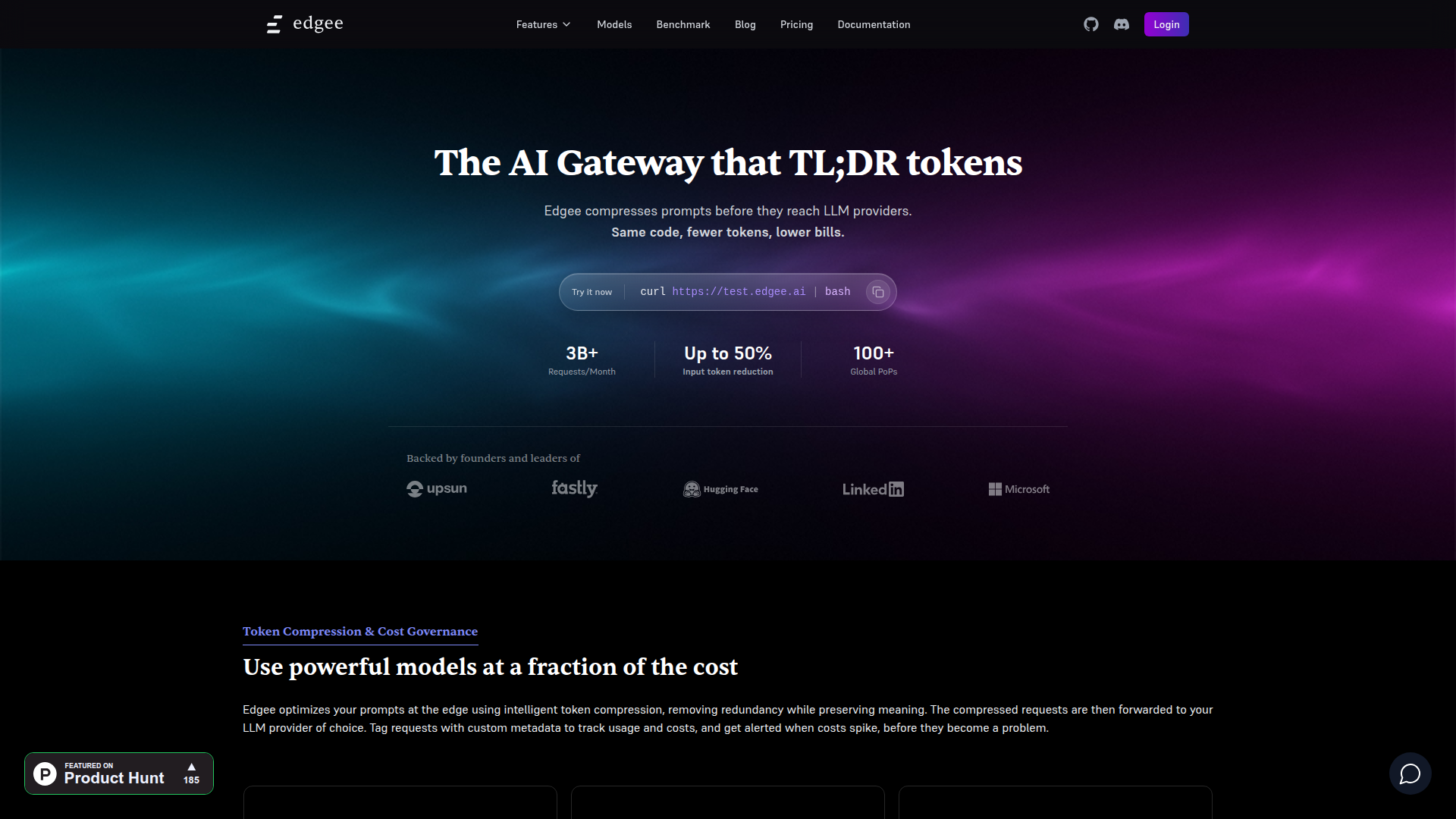

Edgee

A token compression and AI gateway tool

What is Edgee?

Edgee is an AI gateway that compresses prompts at the edge to cut LLM input tokens and costs while preserving meaning. It routes requests across providers via an OpenAI-compatible API and adds tagging, alerts, and observability for governance.

Key features of Edgee

- Intelligent prompt token compression (up to 50% reduction)

- Semantic preservation to keep intent and context intact

- OpenAI-compatible gateway API for easy integration

- Universal compatibility with OpenAI, Anthropic, Gemini, xAI, Mistral, and more
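
To make the "up to 50% reduction" figure concrete, the effect on input cost can be sketched with simple arithmetic. The token volume and the price per million tokens below are made-up illustration values, not Edgee or provider pricing, and `estimate_input_cost` is a hypothetical helper rather than part of any Edgee SDK.

```python
def estimate_input_cost(tokens: int, price_per_million: float, reduction: float = 0.0) -> float:
    """Return the input cost in dollars after a fraction of tokens is removed."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    remaining_tokens = tokens * (1.0 - reduction)
    return remaining_tokens * price_per_million / 1_000_000


# Illustration values only: 2M input tokens at an assumed $3.00 per million tokens.
baseline = estimate_input_cost(2_000_000, 3.0)
# Best case from the feature list: 50% of input tokens removed.
compressed = estimate_input_cost(2_000_000, 3.0, reduction=0.5)

print(f"baseline: ${baseline:.2f}, compressed: ${compressed:.2f}")
```

Since 50% is stated as an upper bound, actual savings would depend on how compressible a given prompt is.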

How to use Edgee

- Install via: curl https://test.edgee.ai | bash

- Create an API key and call the OpenAI-compatible send method with your model and input

- Add tags to requests to track spend by team, feature, or project
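
The last two steps might look roughly like the sketch below, which builds (but does not send) a request in the standard OpenAI chat-completions shape. Several names here are assumptions, not taken from Edgee's documentation: the base URL `https://gateway.example.com/v1` is a placeholder, the `X-Request-Tags` header is a hypothetical way to attach tags, and `build_chat_request` is an illustrative helper. Check the official Edgee docs for the real endpoint and tagging mechanism.

```python
import json
import urllib.request

# Assumption: placeholder gateway URL; replace with the endpoint from your Edgee account.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"
# Assumption: hypothetical header name for tags; the real mechanism may differ.
TAGS_HEADER = "X-Request-Tags"


def build_chat_request(api_key, model, user_input, tags=()):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_input}],
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    if tags:
        # Tags let spend be grouped by team, feature, or project.
        headers[TAGS_HEADER] = ",".join(tags)
    return urllib.request.Request(
        f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = build_chat_request(
    api_key="YOUR_API_KEY",
    model="your-model",
    user_input="Summarize this ticket in one sentence.",
    tags=["team-support", "feature-summaries"],
)
# To actually send it: urllib.request.urlopen(req)
```

Because the gateway is described as OpenAI-compatible, an existing OpenAI client pointed at the gateway's base URL should in principle work as well; the raw request is shown here only to keep the sketch dependency-free.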